Test Your Agent

Make sure your agent is behaving as expected.

Overview

In this article, we cover testing your AI agent.

This article is part of a series covering setting up and deploying AI agents. See the other parts in the series:

- Create an AI Agent

- Write a Behavior Description (Prompt)

- Create Actions and Tools

- Add Your Knowledge Center

- Add Input Parameters

- Check Your Agent

- Test Your Agent - this article

- Integrate Into a Flow

- Use Multiple Agents

Testing Your Agent

Important: Testing new agents is mandatory!

AI agents are like people. They can give different answers to the same questions based on the instructions and information they're provided. The only way to know what results you're going to get is to test.

Just like you wouldn't hire an employee without an interview, you shouldn't release a new agent without testing.

When you're done building and checking your AI agent, it's important to run some tests and make sure it's answering questions and taking actions the way you expect.

This is also the time to try out any edge cases you can think of and confirm that your agent responds appropriately.

You can test your agent in two ways. Each option tests different aspects of your agent, so we recommend using both every time you build a new agent.

Using the Test Agent Button

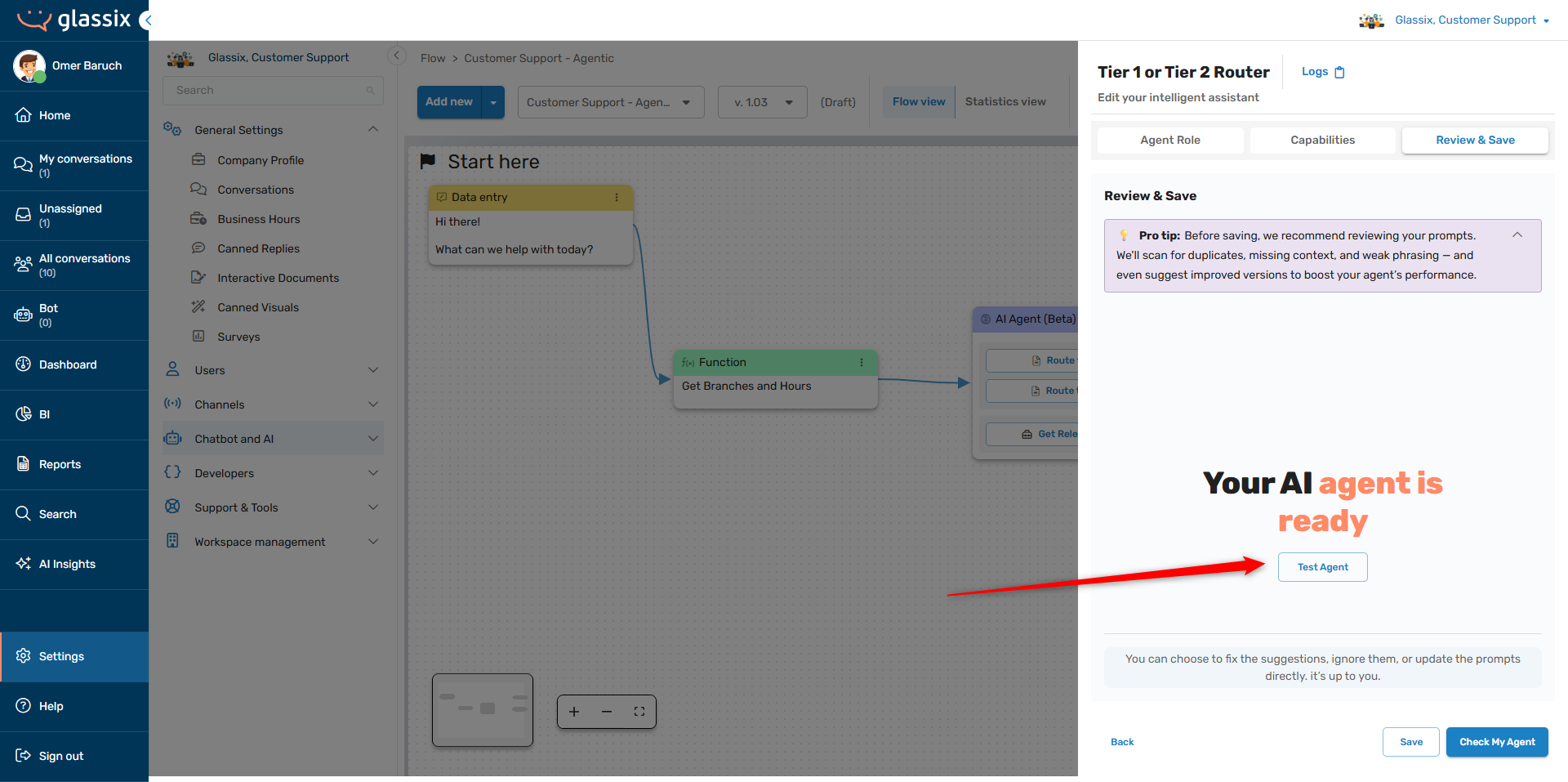

On the last page of the AI agent builder, you will find the Test Agent button:

The Test Agent button takes you to the AI agent tester, where you'll be able to check your agent's responses without context, see the direct output of your agent in any actions and tools, and test with specifically defined input data.

Testing Your Agent Without Context

The AI agent tester automatically starts a conversation with your agent that has no conversational context, meaning that your agent will answer questions based solely on the instructions you've provided and not based on any previous conversation (like it would within a flow).

Info: The AI agent tester still allows your agent to access any Knowledge Center categories you've allowed it to use. This means that you can use the tester to check your agent's responses based on Knowledge Center content.

This is useful for making sure that your agent understands the instructions it's been provided, and is also helpful for troubleshooting:

- If your agent provides correct responses in the AI agent tester, but gets confused or provides incorrect responses within a flow, this indicates that your agent is getting confused by the previous conversation in the flow or by one of its input parameters.

  Try providing your agent with specific messages or input parameters from your flow and see how it responds.

- If your agent does not provide correct responses in the AI agent tester, this indicates that there is an issue with its behavior description prompt or with one of its actions or tools.

  Check and make sure there are no conflicts in the behavior description prompt, or run the Check Your Agent tool again to find any problems.

If you want to test your agent with specific inputs, see below.

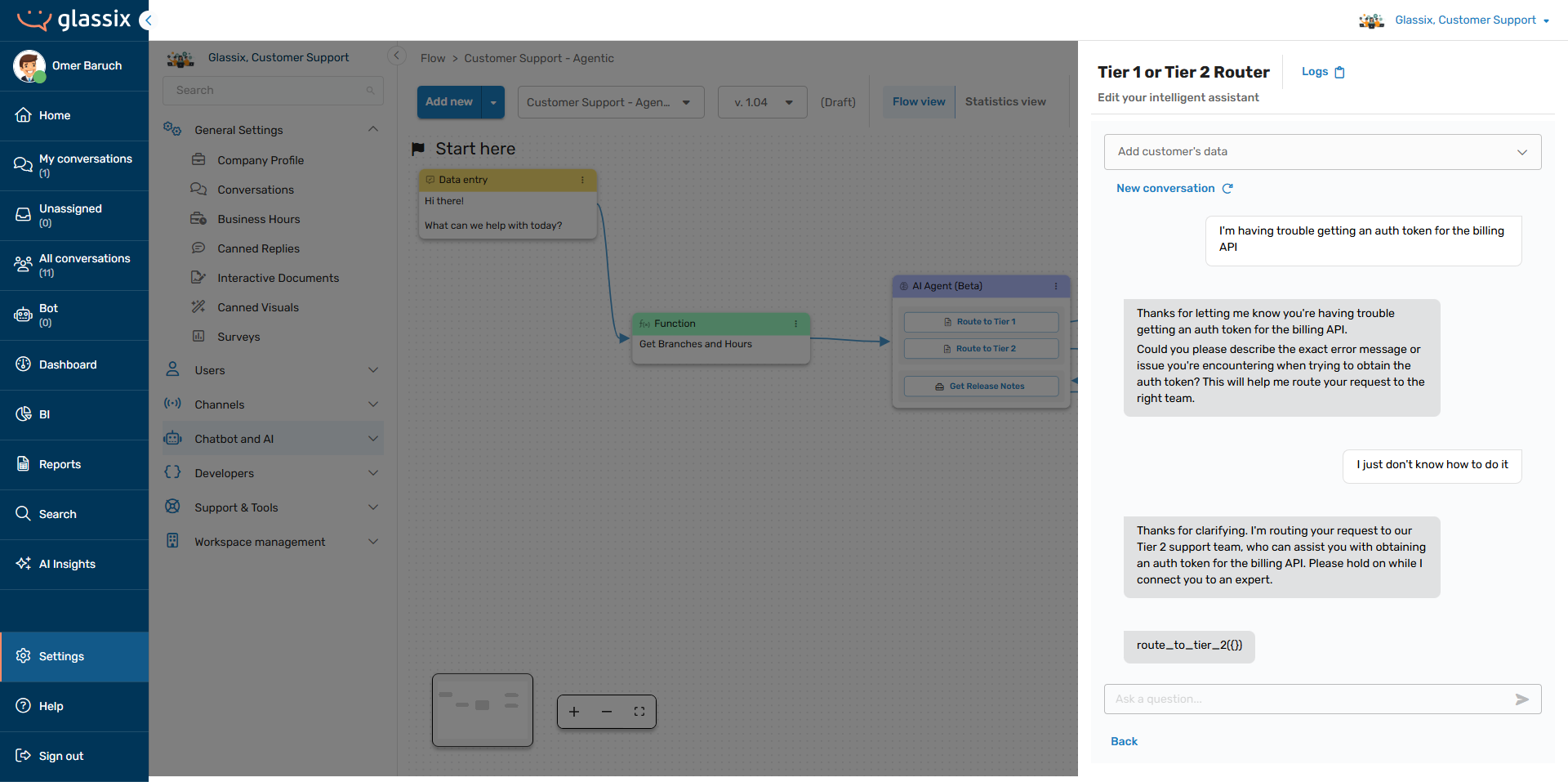

Testing Your Agent's Actions and Tools

The AI agent tester also allows you to view the specific action and tool choices your agent is making, along with the output parameters it's providing:

Action and tool choices will always be formatted in the following way:

action_or_tool_name({outputParam: value})

This is useful for testing your agent's action and tool choices, for confirming that your agent is filling in parameters correctly, and for troubleshooting:

- If your agent is choosing the wrong action or tool, this indicates that there is an issue with the action and tool descriptions.

  Check the action or tool your agent chose in error, and compare its When to use description against that of the action or tool you wanted your agent to choose. See if the descriptions are similar and could both apply to the same situations.

- If your agent is not choosing an action or tool at all, this indicates that there is an issue with the action and tool descriptions, or a conflict between the action/tool description and the behavior description prompt.

  Check your behavior description prompt and make sure there are no instructions which would prevent your agent from choosing an action or tool. Absolute statements like "always do..." and "never do..." are the most likely culprits.

- If your agent is choosing the correct action or tool, but is not filling in parameters correctly, this indicates that there is an issue with the parameter descriptions.

  Check and make sure that your parameters' descriptions match the data you want your agent to fill into them.

  - If the descriptions match the data, the agent may need more instruction on how to fill the parameters correctly. See here for more information.

- If your agent is not filling parameters at all, the parameters may be of the wrong type.

  Check your parameter types and make sure they align with the data you want to fill into them. For example, if you want your agent to fill the parameter with a list of data, make sure your parameter is of type StringArray and not, for example, of type Number.
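The parameter type check described in the last item can also be sketched in code. The example below is a minimal Python sketch, not product code: `StringArray` and `Number` are the type names mentioned above, while the checker functions, the `find_type_mismatches` helper, and the sample parameter names are hypothetical and exist only for illustration.

```python
from typing import Any, Callable


def is_string_array(value: Any) -> bool:
    """True when the value is a list whose items are all strings."""
    return isinstance(value, list) and all(isinstance(item, str) for item in value)


def is_number(value: Any) -> bool:
    """True for ints and floats; bool is excluded because it subclasses int."""
    return isinstance(value, (int, float)) and not isinstance(value, bool)


# Only the two type names mentioned in this article; other types are not assumed.
TYPE_CHECKS: dict[str, Callable[[Any], bool]] = {
    "StringArray": is_string_array,
    "Number": is_number,
}


def find_type_mismatches(declared: dict[str, str], filled: dict[str, Any]) -> list[str]:
    """Return names of parameters that are missing or do not match their declared type."""
    problems = []
    for name, type_name in declared.items():
        if name not in filled or not TYPE_CHECKS[type_name](filled[name]):
            problems.append(name)
    return problems


if __name__ == "__main__":
    # Hypothetical parameter definitions and values copied by hand from a test run.
    declared = {"order_ids": "StringArray", "item_count": "Number"}
    filled = {"order_ids": ["A1", "B2"], "item_count": "two"}
    print(find_type_mismatches(declared, filled))
```

A check like this is only a reading aid for values you copy out of the tester. The fix itself still happens in the parameter definitions of your action or tool.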

If your actions or tools rely on input parameter filtering, read on to learn how to provide your agent with specific input data.

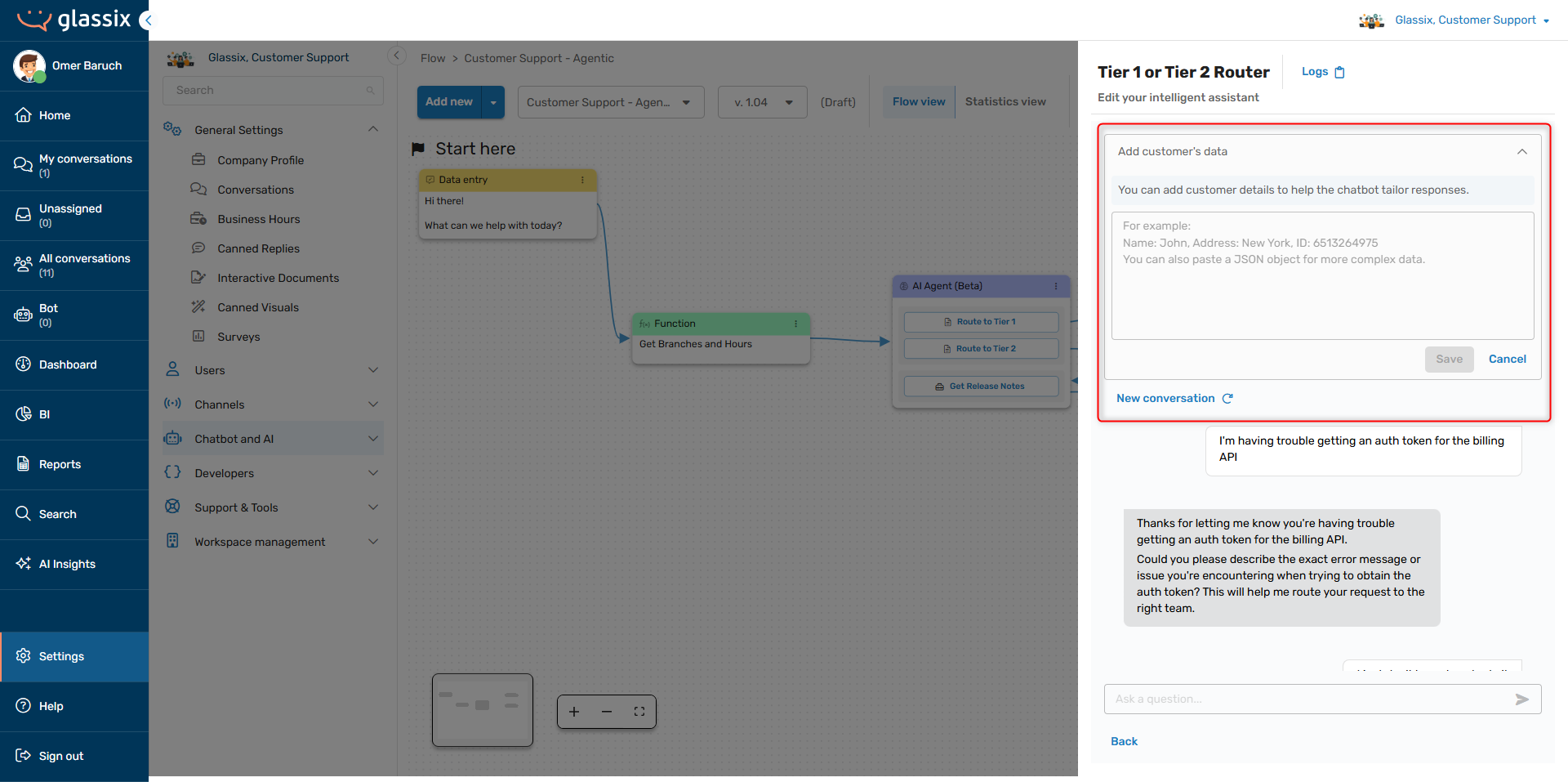

Testing Your Agent with Specific Input Data

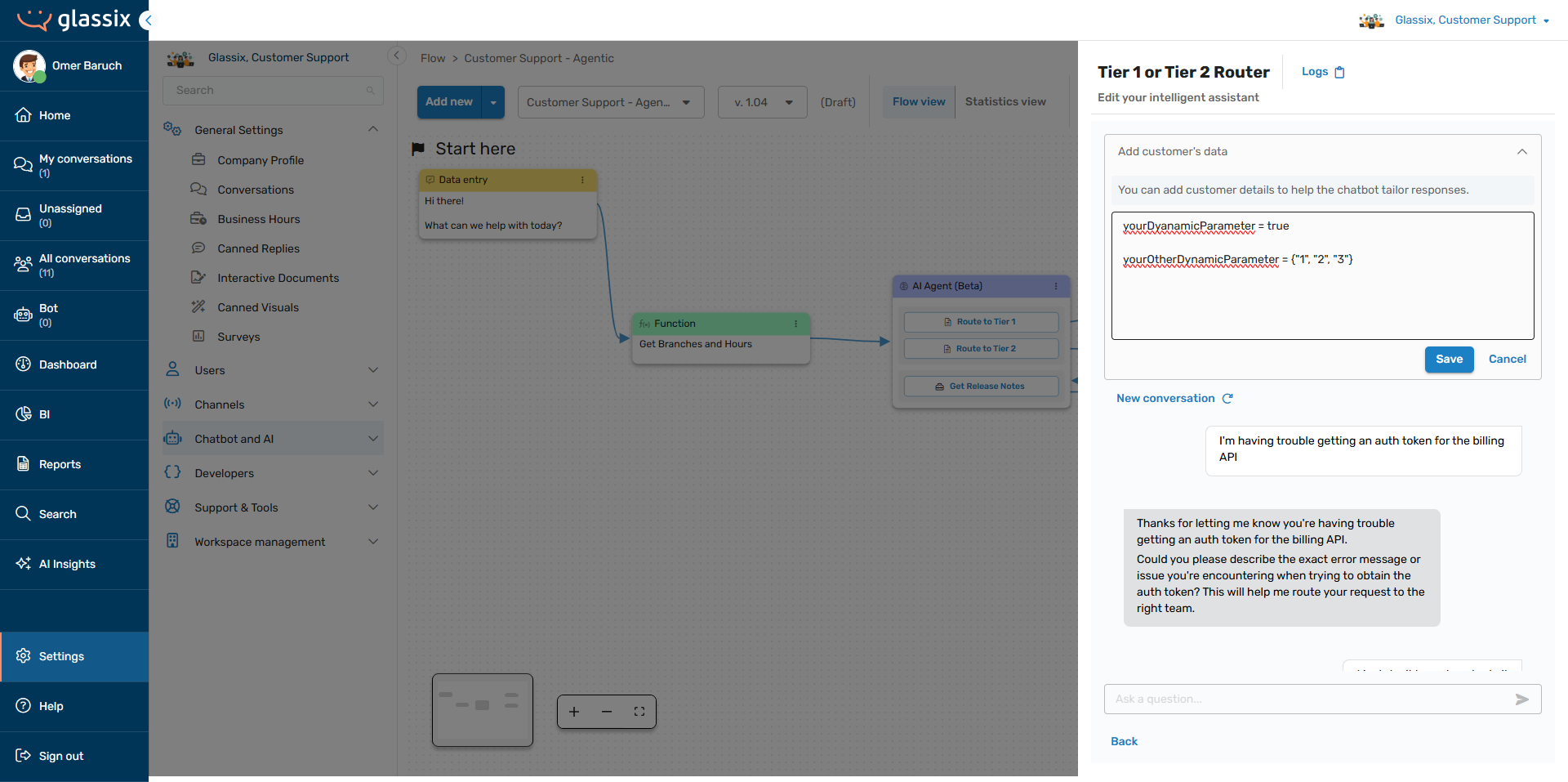

When you come across a problem with your agent that seems to only happen with certain inputs, or if you just want to see how your agent behaves with all its input parameters, you can use the Add customer's data field to provide your agent with specific input data:

This allows you to provide your agent with both structured and unstructured input data, similar to what it would receive in a flow.

Any data you provide in this field will be available to the agent throughout the entire test.

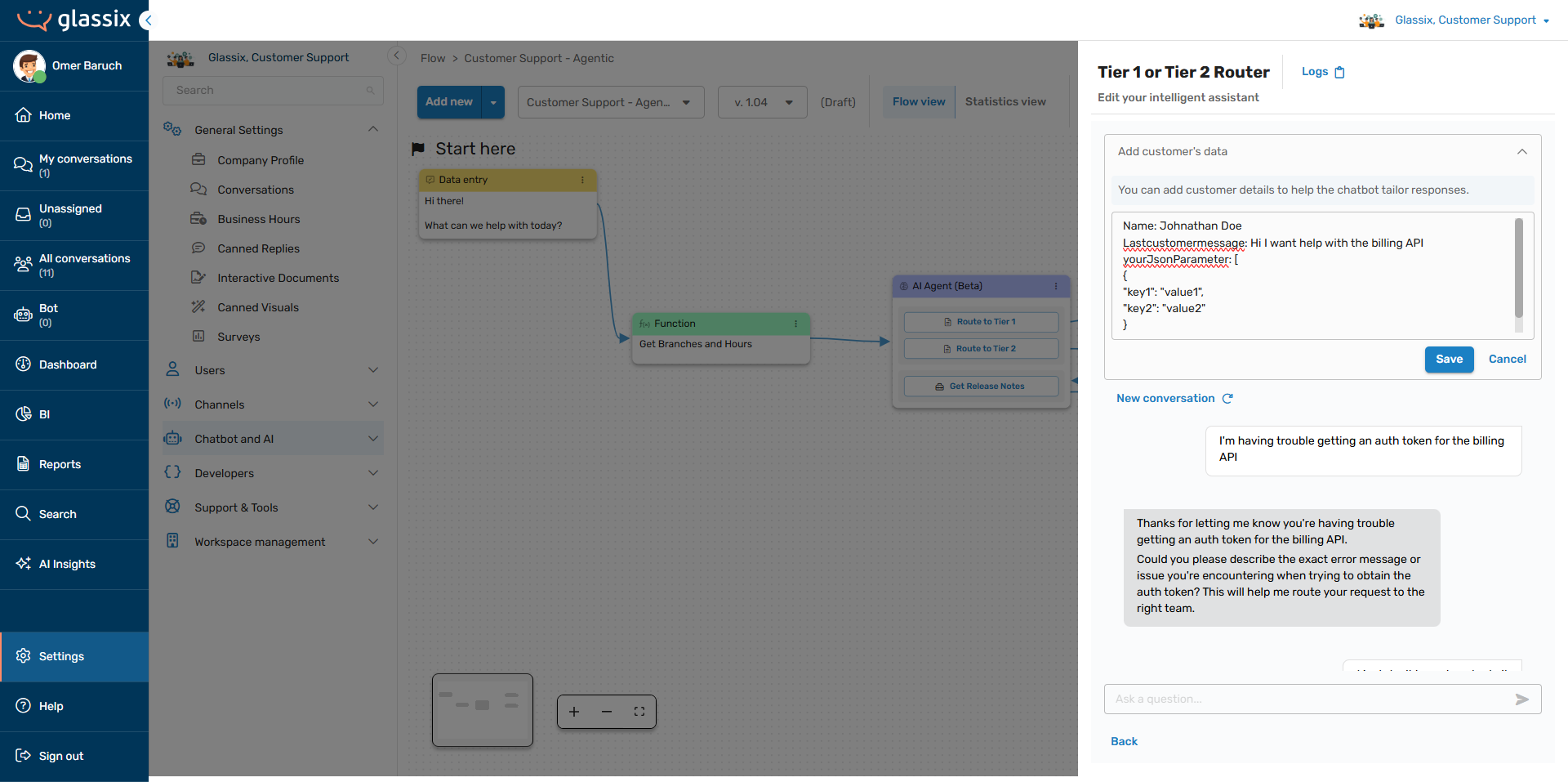

Tip: If you want to provide your agent with a previous message or messages for testing, for example to replicate previous messages in a flow, you can add a line like this to the customer data field:

lastcustomermessage: "Your message text here"

The agent understands the lastcustomermessage parameter and will treat it like the previous message in the conversation.

While you don't have to follow a specific format when providing data to your agent (the AI will generally understand what you're telling it in this box), we recommend using a format like the below for the most consistent results:

You can also provide raw JSON to your agent in this field.

If you want to provide your agent with specific input parameters that are referenced by name in your behavior description prompt or in your actions and tools, define them by name in the customer data field. For example:
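As one possible illustration, the Python sketch below builds text that could be pasted into the customer data field, both as named lines and as raw JSON. It rests on assumptions: the `name: "value"` layout is modeled on the `lastcustomermessage` line shown earlier and is not an official format, and the helper `as_named_lines` and the parameter names `customerName` and `openTickets` are hypothetical.

```python
import json


def as_named_lines(params: dict[str, object]) -> str:
    """Render each parameter as `name: value`, one per line, with strings quoted."""
    return "\n".join(
        f"{name}: {json.dumps(value, ensure_ascii=False)}"
        for name, value in params.items()
    )


if __name__ == "__main__":
    params = {
        "customerName": "Dana",  # hypothetical input parameter
        "openTickets": 2,        # hypothetical input parameter
        "lastcustomermessage": "Where is my order?",
    }
    # Named lines, one parameter per line.
    print(as_named_lines(params))
    # The same data as raw JSON.
    print(json.dumps(params, indent=2, ensure_ascii=False))
```

Use the same parameter names that appear in your behavior description prompt and in your actions and tools, so that the agent can match the test data to its instructions.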

This is useful for testing how your agent responds to specific input data, input parameters, and prior messages in the conversation, and for troubleshooting:

- If your agent responds correctly until you provide it with a specific piece of data, this indicates that the agent has not been told explicitly enough how to handle the data you're providing it.

  Check and make sure your agent has the instructions it needs to use the data you provide.

- If your agent does not respond correctly when provided with specific prior messages, this indicates that the agent has not been given instructions for handling the situation or question in the message provided.

  Check your behavior description prompt and your actions/tools to make sure you have explained to your agent how to handle the context of the problematic message.

- If your agent starts getting confused after you've provided it with your JSON, this indicates that you may be overloading your agent with data.

  See if there is anything unnecessary in the JSON you're providing and remove it, either in the relevant API call or by using a function.
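The idea of trimming JSON with a function can be sketched as follows. This is a minimal Python sketch under stated assumptions: the helper `keep_fields` and the sample response fields are hypothetical, and how such a function is wired into your flow depends on your own setup.

```python
import json
from typing import Any


def keep_fields(payload: dict[str, Any], needed: set[str]) -> dict[str, Any]:
    """Return a copy of payload containing only the top-level keys in `needed`."""
    return {key: value for key, value in payload.items() if key in needed}


if __name__ == "__main__":
    # Hypothetical API response with fields the agent does not need.
    api_response = {
        "orderId": "A1",
        "status": "shipped",
        "internalAuditLog": ["created", "packed"],
        "warehouseMetadata": {"bin": 14},
    }
    trimmed = keep_fields(api_response, {"orderId", "status"})
    print(json.dumps(trimmed))
```

Passing an explicit list of needed fields, rather than a list of fields to drop, keeps the agent's input small even if the API later adds new fields.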

Once you've completed testing your agent using the tools above, it's time to add it to a flow and test the flow itself with the agent included.

Using the Flow Preview Button

Note: Tests from the AI agent tester and tests from the flow tester are likely to produce different results.

This is because tests from the AI agent tester do not have any conversation history context or input parameters (unless provided), while tests from the flow tester do.

Testing is especially important for any flow that includes your AI agent, because you need to see how your agent behaves when it's provided with the full set of messages and input parameters your flow will give it.

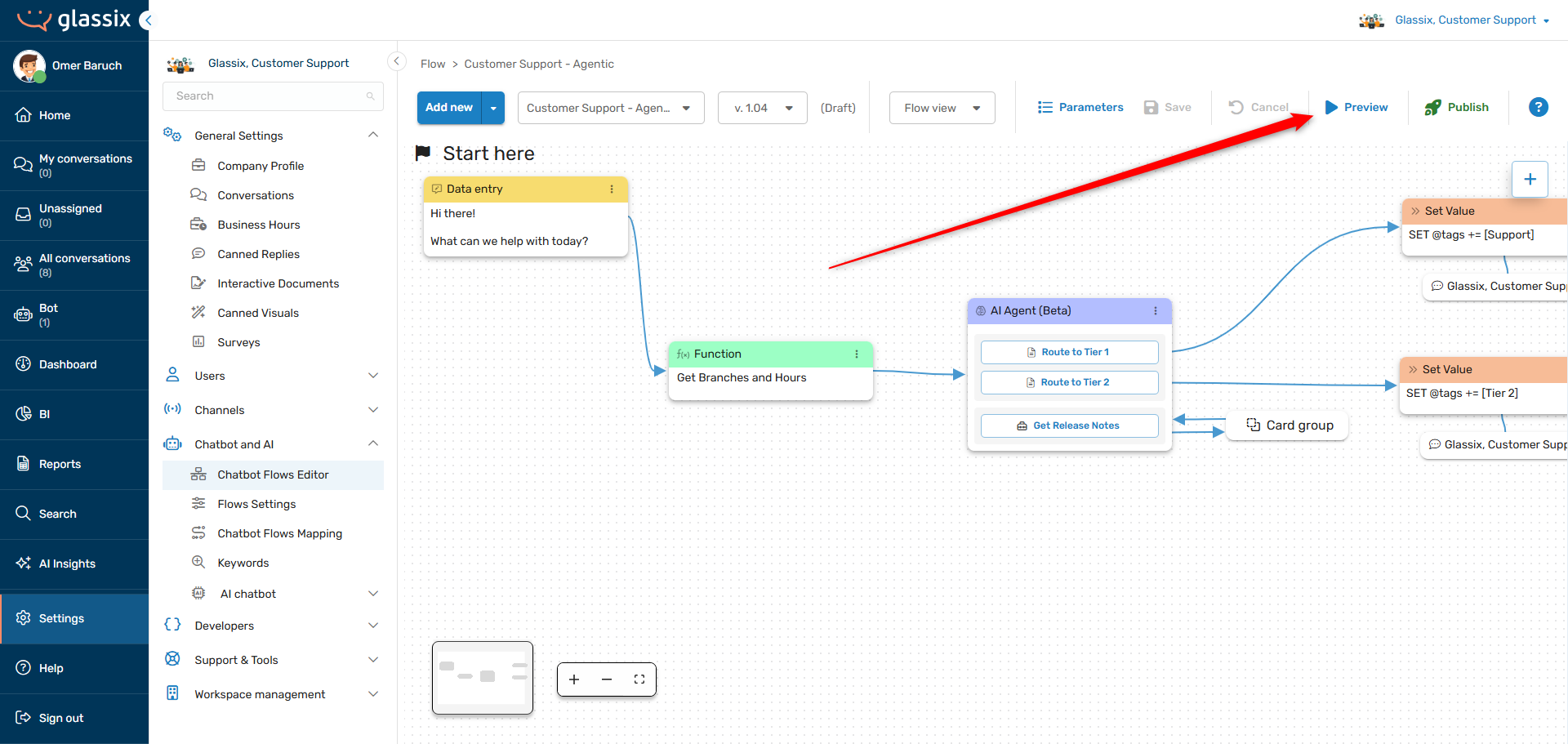

Once you've added your agent to a flow, you can test it just like any other flow by using the Preview Flow button:

If you find that your agent is behaving differently in your flow than it did in the AI agent tester, this indicates one or more of the following three issues:

- Your AI agent is having trouble understanding the conversation context.

- Your AI agent is having trouble understanding its input parameters.

- Your flow is not providing input parameters to your agent.

We recommend the following testing procedure if you run into this issue:

- Check and make sure your flow is actually providing input parameters to your agent (if relevant) by making sure those parameters are being filled somewhere in your flow prior to your AI Agent step.

- If any parameters are not being filled, make sure they get filled before reaching your agent and test again.

- Once you have confirmed all input parameters are being filled by your flow, return to the AI agent tester and provide it with all the input parameters in the customer data field.

- If your agent has the same problem in the AI agent tester when it's provided with all its input parameters, one or more of the parameters are confusing it.

  Try removing parameters until your agent behaves as expected - the last parameter you removed will be the one causing the issue. Then, follow the instructions in the link above to troubleshoot.

- If you have confirmed that the agent is behaving correctly with all its input parameters, try providing it with lastcustomermessage data from your flow. Add in each prior message from the flow until you run into a problem.

- Once you know which message is causing the issue, add some relevant instructions to either the behavior description prompt (if the agent is responding incorrectly) or to the relevant action/tool description (if the agent is not choosing the correct action or tool) in order to handle this scenario.
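The two elimination steps in this procedure (removing parameters one at a time, then adding prior messages one at a time) can be sketched as simple loops. The Python below is an illustration only: `behaves_correctly` is a hypothetical stand-in for a manual check in the AI agent tester, and the function names and sample data are assumptions, not part of the product.

```python
from typing import Callable, Optional


def find_confusing_parameter(
    params: dict[str, object],
    behaves_correctly: Callable[[dict[str, object]], bool],
) -> Optional[str]:
    """Remove parameters one at a time; return the one whose removal fixes the agent."""
    if behaves_correctly(params):
        return None  # nothing to troubleshoot
    remaining = dict(params)
    for name in list(params):
        del remaining[name]
        if behaves_correctly(remaining):
            return name  # the last parameter removed
    return None


def find_problem_message(
    messages: list[str],
    behaves_correctly: Callable[[list[str]], bool],
) -> Optional[str]:
    """Add prior messages one at a time; return the first one that causes a problem."""
    for count in range(1, len(messages) + 1):
        if not behaves_correctly(messages[:count]):
            return messages[count - 1]
    return None


if __name__ == "__main__":
    # Fake checks standing in for manual test runs in the AI agent tester.
    params = {"plan": "gold", "notes": "free text", "region": "EU"}
    print(find_confusing_parameter(params, lambda current: "notes" not in current))

    history = ["Hi", "I want a refund", "Thanks"]
    print(find_problem_message(history, lambda sent: "I want a refund" not in sent))
```

Because removal is cumulative, this mirrors the manual procedure exactly. If several parameters interact, it can help to repeat the test with only the suspected parameter removed.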

If you continue to run into any issues with your testing after trying the above steps, reach out to your implementation provider or Glassix contact for assistance.

Next Step

Now that you have tested your agent, it's time to Integrate into a Flow.
